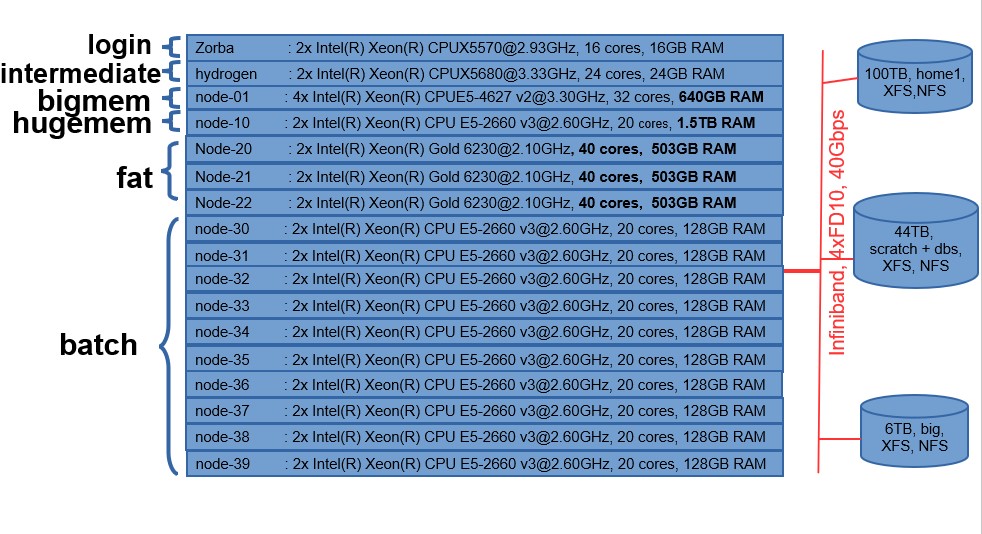

Zorbas is a high-performance computing (HPC) cluster with almost 400 cores and 5 TB of memory in total. It comprises 4 computing partitions (classes of computers with similar specifications), one login node through which users access the system, and an intermediate node where users can test their scripts and/or submit their jobs.

Computing Partitions or Queues

- The Bigmem partition is intended for memory-intensive jobs. It consists of 1 node with 32 cores and can support processes requiring up to 640 GB of RAM.

- The Hugemem partition is intended for extremely memory-intensive jobs. It consists of 1 node with 20 cores and can support processes requiring up to 1.5 TB of RAM.

- The Batch partition is composed of 10 nodes, each with 20 cores and 128 GB of RAM. Users can submit parallel jobs (running either on a single node or across several nodes) to the Batch partition.

- The Fat partition consists of 3 nodes, each with 40 cores and 500 GB of RAM. Users can submit highly CPU-intensive jobs to this partition.
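As a quick cross-check of the totals quoted at the top of this page, the sketch below tabulates the four partitions and sums their cores and RAM. It reads the Fat figures as per node, counts 1.5 TB as 1500 GB, and leaves out the login and intermediate nodes; the `Partition` class and the lowercase names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str
    nodes: int
    cores_per_node: int
    mem_gb_per_node: int  # nominal RAM per node in GB (1.5 TB counted as 1500 GB)


# Figures taken from the partition list; names are illustrative labels
PARTITIONS = [
    Partition("bigmem", 1, 32, 640),
    Partition("hugemem", 1, 20, 1500),
    Partition("batch", 10, 20, 128),
    Partition("fat", 3, 40, 500),
]


def totals(partitions):
    """Sum cores and RAM over every node of every partition."""
    cores = sum(p.nodes * p.cores_per_node for p in partitions)
    mem_gb = sum(p.nodes * p.mem_gb_per_node for p in partitions)
    return cores, mem_gb


cores, mem_gb = totals(PARTITIONS)
print(f"compute cores: {cores}, compute RAM: {mem_gb} GB")
```

The result, 372 cores and 4920 GB, matches the "almost 400 cores and 5 TB" description.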

Together, the four partitions serve the computational needs of submitted jobs. The Bigmem and Hugemem partitions support memory-intensive jobs (up to 640 GB and 1.5 TB, respectively), Batch mostly (but not exclusively) handles parallel jobs, and Fat is intended for highly CPU-intensive jobs.
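The partition guidance above can be sketched as a small helper that picks the first partition whose nodes can hold a job's per-node request. This assumes the cluster is scheduled with Slurm (the term "partition" suggests it, but this page does not say so); the partition names, the preference order, and the helpers `choose_partition` and `sbatch_header` are all hypothetical. Nominal node RAM is used, although the memory actually available to jobs is usually somewhat lower.

```python
# Partition specs from the list above: name -> (nodes, cores per node, RAM per node in GB).
# Partition names are assumed to match the labels used on this page.
SPECS = {
    "batch": (10, 20, 128),
    "fat": (3, 40, 500),
    "bigmem": (1, 32, 640),
    "hugemem": (1, 20, 1500),
}

# Hypothetical preference order: general-purpose first, large-memory nodes last
PREFERENCE = ("batch", "fat", "bigmem", "hugemem")


def choose_partition(mem_gb_per_node, cores_per_node, nodes=1):
    """Return the first partition in PREFERENCE that can hold the request."""
    for name in PREFERENCE:
        max_nodes, max_cores, max_mem = SPECS[name]
        if nodes <= max_nodes and cores_per_node <= max_cores and mem_gb_per_node <= max_mem:
            return name
    raise ValueError("no single partition can satisfy this request")


def sbatch_header(partition, nodes, cores_per_node, mem_gb_per_node):
    """Render Slurm #SBATCH directives, one task per core (MPI-style)."""
    return "\n".join([
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={cores_per_node}",
        f"#SBATCH --mem={mem_gb_per_node}G",  # Slurm's --mem is memory per node
    ])


print(choose_partition(600, 8))  # bigmem: too much RAM for batch or fat
print(sbatch_header(choose_partition(64, 16, nodes=4), 4, 16, 64))
```

A multithreaded (rather than MPI-style) job would typically request cores with `--cpus-per-task` instead of `--ntasks-per-node`.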

Nodes are interconnected via a 40 Gbps InfiniBand network. Home directories are shared via NFS, so data is accessible throughout the whole cluster. Two more file systems, also shared via NFS and InfiniBand, host software packages (big) and biological databases (dbs). The scratch file system serves as temporary storage where user data can be placed during job execution.
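A common way to use a scratch file system is to copy inputs there from the home directory, run the job against the local copy, copy the results back, and clean up. The sketch below assumes nothing about the real scratch mount point: `scratch_root` is a parameter, and `run_in_scratch` and its `work` callback are hypothetical names used for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def run_in_scratch(input_file, results_dir, work, scratch_root):
    """Stage input_file into a private scratch directory, run work() there,
    copy its outputs back to results_dir, then delete the scratch copy.

    work(staged_input, job_dir) is a hypothetical callback that returns
    a list of output file paths inside job_dir.
    """
    input_file = Path(input_file)
    results_dir = Path(results_dir)
    results_dir.mkdir(parents=True, exist_ok=True)
    # A unique directory per run avoids clashes between concurrent jobs
    job_dir = Path(tempfile.mkdtemp(prefix="job_", dir=str(scratch_root)))
    try:
        staged = job_dir / input_file.name
        shutil.copy2(input_file, staged)  # home -> scratch
        outputs = [Path(p) for p in work(staged, job_dir)]
        saved = []
        for out in outputs:
            dest = results_dir / out.name
            shutil.copy2(out, dest)  # scratch -> home
            saved.append(dest)
        return saved
    finally:
        # Scratch is temporary: remove the job directory even if work() fails
        shutil.rmtree(job_dir, ignore_errors=True)
```

For example, with a `count_lines(staged, job_dir)` callback that writes the line count of its input to `job_dir / "count.txt"` and returns that path, `run_in_scratch("reads.txt", "results", count_lines, scratch_root)` leaves `results/count.txt` in place and nothing behind in scratch.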

The block diagram of the IMBBC High Performance Cluster (ZORBAS) is shown in the following figure.

You can find more information about our infrastructure here.