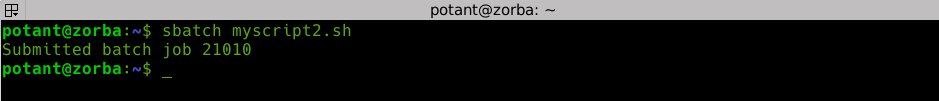

Jobs are submitted to the zorbas cluster with the sbatch command.

In the example above, the script file myscript2.sh is passed to sbatch, and upon submission the job is assigned the job id 21010. The script must follow a specific structure, as in the following example.
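When submission is driven from another script, the job id can be extracted from the confirmation line that sbatch prints. The following is a minimal sketch, assuming the usual "Submitted batch job <id>" message; the helper name extract_job_id is hypothetical and not part of Slurm.

```shell
#!/bin/bash
# Hypothetical helper: print the numeric job id found in sbatch's
# confirmation message (assumed format: "Submitted batch job <id>").
# Returns a non-zero status if the message does not match.
extract_job_id() {
    # Match the fixed prefix and keep only the digits that follow it.
    job_id=$(printf '%s\n' "$1" | sed -n 's/^Submitted batch job \([0-9][0-9]*\).*$/\1/p')
    [ -n "$job_id" ] && printf '%s\n' "$job_id"
}

# Example using the job id from the submission described above.
extract_job_id "Submitted batch job 21010"   # prints: 21010
```

On the cluster this could be used as jobid=$(extract_job_id "$(sbatch myscript2.sh)"). sbatch also offers a --parsable option that prints the job id without the surrounding text, which may be simpler where it suits the workflow.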

    #!/bin/bash
    #SBATCH --partition=batch
    #SBATCH --nodes=1
    #SBATCH --ntasks-per-node=20
    #SBATCH --mem=40000
    #SBATCH --job-name="myjob2"
    #SBATCH --output=myjob2.output
    #SBATCH --mail-user=myname@mail.gr
    #SBATCH --mail-type=ALL
    mycommand1 -input1 -input2 -output
    mycommand2 -input1 -input2 -output1 -output2
    ...

The batch script must list its options, each preceded by "#SBATCH", before any executable commands. Executable commands can be any program or script located in widely accessible Linux directories (e.g. /usr/bin), in the user's home directory, or in the zorbas software directory (/mnt/big/). There must be no space between # and SBATCH; otherwise the line is treated as a comment and the option is ignored. Some of the primary sbatch options are described in the table below.
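The spacing rule above is easy to check mechanically before submitting. The following is a small sketch, not an official zorbas tool; find_spaced_directives is a hypothetical helper that lists lines where whitespace after # has turned a directive into an ordinary comment.

```shell
#!/bin/bash
# Hypothetical helper: list lines such as "# SBATCH --nodes=1", where
# whitespace after "#" makes the scheduler treat the line as a comment.
# Exit status follows grep: 0 if such lines exist, 1 if none were found.
find_spaced_directives() {
    # -n prefixes each match with its line number; the pattern requires
    # at least one whitespace character between "#" and "SBATCH".
    grep -n -E '^#[[:space:]]+SBATCH' "$1"
}

# Demonstration on a throwaway script with one faulty directive.
demo=$(mktemp)
printf '%s\n' '#!/bin/bash' '#SBATCH --nodes=1' '# SBATCH --mem=40000' 'mycommand1' > "$demo"
find_spaced_directives "$demo"   # prints: 3:# SBATCH --mem=40000
rm -f "$demo"
```

Running find_spaced_directives myscript2.sh before sbatch would flag any such lines in a real job script.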

| Option | Description |
| --- | --- |
| --partition | The partition where the job will be executed. If omitted, resources are allocated on the default partition, which in the current configuration is minibatch. |
| --nodes | The number of nodes to allocate. One node is usually adequate for a single job. To use more nodes per job, the user's commands or scripts must rely on techniques such as MPI or Slurm arrays. Allocating nodes that the job cannot use wastes resources. For more details, please contact the system administrators. |
| --ntasks-per-node | The number of cores (alternatively, tasks or threads) to allocate per node for the job. Users must make sure that their commands or scripts support multithreaded execution, so that the allocated cores are actually used. A program or script that does not support multithreading should not be given more than one core (--ntasks-per-node=1). |
| --mem | The memory to allocate to the job, in MB. If omitted, the total memory of the node is allocated, which in some cases wastes resources. The exact amount of memory a job needs cannot be known in advance, and only experienced users can estimate it approximately. Users are generally advised to avoid --mem and to use --mem-per-cpu instead. |
| --mem-per-cpu | The memory, in MB, to allocate to each allocated core of the node. Attention: --mem and --mem-per-cpu are mutually exclusive, so use only one of them (preferably the latter). |
| --job-name | The job name, which is shown in the output of the squeue command. Descriptive job names make jobs easier to track. |
| --output | The name of the file to which standard output and standard error are written. |
| --mail-user | The email address to which notifications about the job are sent. |
| --mail-type=ALL | Notifications are sent when the job starts, ends, or fails. |
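To move the example script from --mem to the recommended --mem-per-cpu, the total memory can be divided by the number of requested cores. The following is a small sketch of that arithmetic; mem_per_cpu is a hypothetical helper, and the assumption is that memory is shared evenly across the cores.

```shell
#!/bin/bash
# Hypothetical helper: per-core memory in MB for a given total (MB)
# and core count. Shell arithmetic is integer, so the result rounds down.
mem_per_cpu() {
    echo $(( $1 / $2 ))
}

# Values from the example script: --mem=40000 and --ntasks-per-node=20.
echo "#SBATCH --mem-per-cpu=$(mem_per_cpu 40000 20)"   # prints: #SBATCH --mem-per-cpu=2000
```

Under that assumption, the line #SBATCH --mem=40000 in the example script would be replaced by #SBATCH --mem-per-cpu=2000; the two options must not appear together.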